Z-Image

Lightweight Image Generation Engine

Z-Image is a lightweight image generation tool featuring an efficient 8-Step inference architecture. It delivers fast, high-quality AI image generation on consumer-grade GPUs while significantly reducing computational costs.

Example Showcase

Cinematic Jazz Saxophonist

Tokyo Rainy Night Street Documentary

The Artisan Watchmaker

Tang Dynasty Hanfu Portrait

High-Fashion Texture

Studio Ghibli Illustration

Vintage Movie Poster "The Taste of Memory"

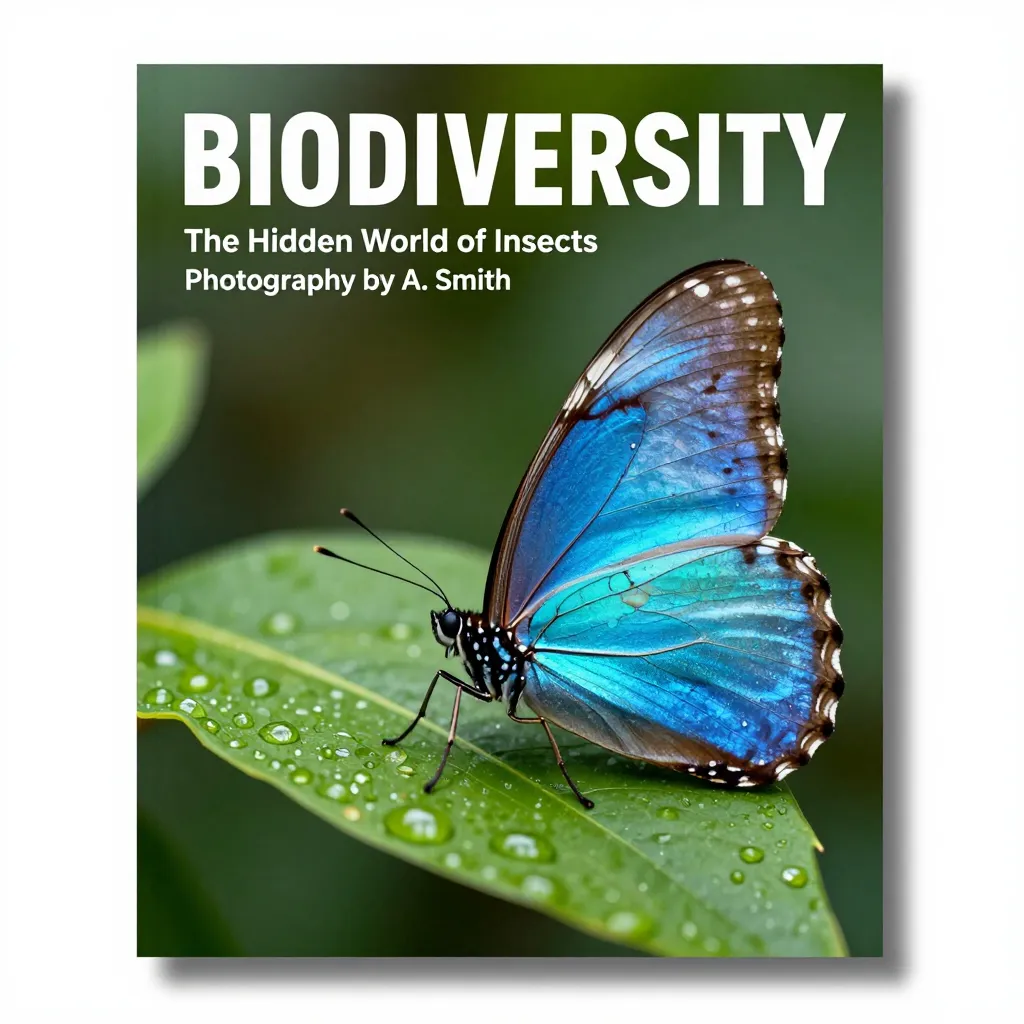

Nature Magazine Cover

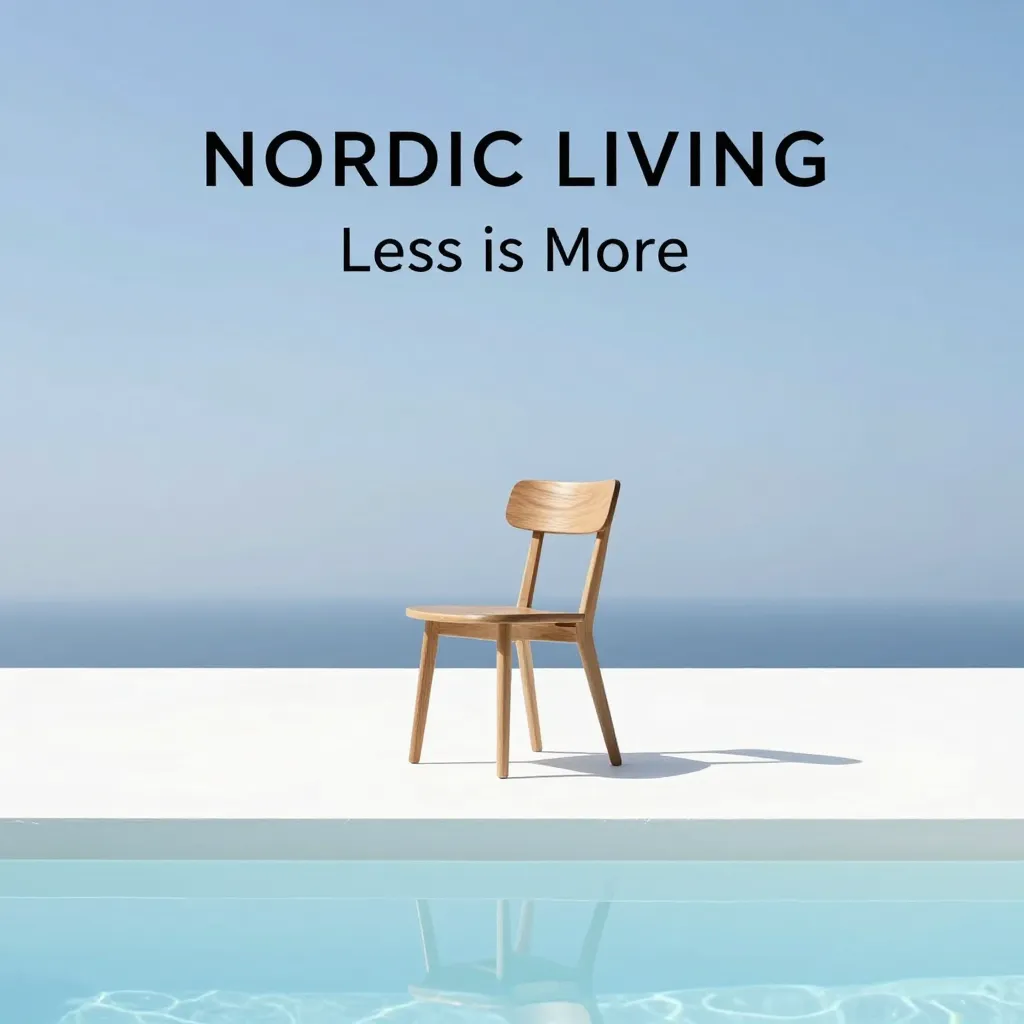

Minimalist Chair Poster Design

Not Just Fast, It's Fully Evolved

Filling the gap between lightweight and massive models, Z-Image-Turbo finds the perfect balance between speed, quality, and usability.

Native Bilingual Support

Powered by the Qwen3-4B LLM. No more garbled Chinese characters: calligraphy, signage, and complex typography are rendered accurately.

S3-DiT Single Stream

Radical architectural innovation. Text and image tokens are processed as one sequence by the same Transformer layers, much as a GPT-style language model handles its tokens, so every parameter serves both generation and understanding.

Apache 2.0 License

True open-source freedom. Unlike Flux.1 with its commercial restrictions, Z-Image-Turbo is free to use commercially, modify, and integrate into your own products. Ideal for startups and game studios.

S3-DiT: Breaking Modal Barriers

Traditional models use a "dual-stream" architecture, in which text and image tokens pass through separate sets of weights. Z-Image-Turbo adopts the Scalable Single-Stream Diffusion Transformer (S3-DiT) instead.

- Unified Input Stream: Text Tokens and Image Latents are concatenated directly.

- Full Parameter Interaction: Every Transformer layer performs deep text-image attention calculation.

- Decoupled-DMD: The core algorithm that compresses inference to just 8 steps.

- CFG Enhancement: Independently optimized guidance signals for sharp images without high CFG values.
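The single-stream idea in the list above can be sketched in a few lines of plain Python. This is a toy illustration, not Z-Image's actual implementation: `self_attention` uses identity projections instead of learned weights, and the function names and the tiny 2-dimensional vectors are assumptions chosen for readability.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(seq):
    """Single-head attention with identity Q/K/V projections (toy version)."""
    dim = len(seq[0])
    out = []
    for q in seq:
        # Scaled dot-product score of this token against every token in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in seq]
        weights = softmax(scores)
        # Weighted average of all token vectors.
        out.append([sum(w * v[i] for w, v in zip(weights, seq)) for i in range(dim)])
    return out


def single_stream_layer(text_tokens, image_latents):
    """Concatenate both modalities, attend over the joint sequence, split again."""
    seq = text_tokens + image_latents      # unified input stream
    mixed = self_attention(seq)            # every token attends to every other token
    n_text = len(text_tokens)
    return mixed[:n_text], mixed[n_text:]


text = [[1.0, 0.0]]                  # one hypothetical text token
image = [[0.0, 1.0], [0.5, 0.5]]     # two hypothetical image latents
text_out, image_out = single_stream_layer(text, image)
print(text_out, image_out)
```

Because both modalities share one sequence and one set of layer weights, changing the text token changes the image outputs directly, with no separate cross-attention branch.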

Why Choose Z-Image-Turbo?

We provide the optimal solution balancing performance, cost, and ecosystem.

| Metric | Z-Image-Turbo | Flux.1 (Dev) | SDXL Base |
|---|---|---|---|
| Parameters | 6B (Balanced) | 12B (Massive) | 2.6B |
| VRAM Required | 12GB (Native BF16) | 24GB+ (or Quantized) | 8GB |
| Steps | 8 Steps (Distilled) | 20-50 Steps | 20-50 Steps |
| Text Encoder | Qwen3-4B (Bilingual) | T5 + CLIP | OpenCLIP |
| Typography | ⭐️⭐️⭐️⭐️⭐️ Perfect | ⭐️⭐️ Poor | ⭐️ Garbled |
| License | Apache 2.0 | Non-Commercial | OpenRAIL++ |
| Cost per Image | ~$0.0029 | High | Low |
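To make the Steps and cost rows concrete, here is a small back-of-the-envelope calculation using only the figures from the table. The helper names are hypothetical, and the step ratio is not a wall-clock speedup, since the cost of each step also depends on model size and hardware.

```python
def denoising_step_ratio(baseline_steps: int, turbo_steps: int = 8) -> float:
    """How many times fewer denoising steps the 8-step model runs."""
    return baseline_steps / turbo_steps


def batch_cost_usd(num_images: int, cost_per_image: float = 0.0029) -> float:
    """Total cost of a batch at the table's approximate per-image price."""
    return num_images * cost_per_image


# Step range from the table: 20-50 steps for the non-distilled models.
print(denoising_step_ratio(20), denoising_step_ratio(50))
# Approximate cost of 1,000 images at ~$0.0029 each.
print(round(batch_cost_usd(1000), 2))
```

Under these figures, the 8-step schedule runs 2.5x to 6.25x fewer denoising steps, and 1,000 images cost roughly $2.90.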

A Boon for Consumer Hardware

Thanks to its 6B parameter scale and 8-step distillation, Z-Image-Turbo generates an image in 2-3 seconds on an RTX 3090 or 4090. On enterprise H800 GPUs, sub-second generation is achievable.

Quick Start

```python
# Quick load with Diffusers
import torch
from diffusers import DiffusionPipeline

# Load the 8-step Turbo model in BF16 and move it to the GPU
pipe = DiffusionPipeline.from_pretrained(
    "Tongyi-MAI/Z-Image-Turbo",
    torch_dtype=torch.bfloat16,
).to("cuda")

# Generate an image; the prompt uses single quotes outside so the
# text to render can sit inside double quotes
image = pipe(
    prompt='Cyberpunk detective, rainy night, neon lights, Chinese sign saying "Tongyi Lab"',
    num_inference_steps=8,
    guidance_scale=1.0,  # Distilled models don't need high CFG
).images[0]
```
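The `guidance_scale=1.0` setting in the Quick Start can be read through the standard classifier-free guidance (CFG) formula, sketched below on plain lists. This is the common textbook formulation; that the Z-Image pipeline applies exactly this formula internally is an assumption, and `apply_cfg` is a hypothetical helper, not part of Diffusers.

```python
def apply_cfg(uncond, cond, guidance_scale):
    """Standard CFG: move from the unconditional prediction toward the conditional one."""
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


# Made-up model predictions for illustration only.
uncond = [0.25, -0.5, 1.0]
cond = [0.5, 0.25, -1.0]

print(apply_cfg(uncond, cond, 1.0))  # [0.5, 0.25, -1.0], identical to cond
print(apply_cfg(uncond, cond, 5.0))  # [1.5, 3.25, -9.0], pushed far past cond
```

At a scale of 1.0 the formula returns the conditional prediction unchanged, so no extra guidance is applied. Higher scales extrapolate beyond it, which is the amplification a distilled model is meant to make unnecessary.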

Frequently Asked Questions

Questions about deployment, usage, and licensing.

GPU requirements?

For native precision (BF16), a card with at least 16GB of VRAM (such as the RTX 4080 or RTX 3090) is recommended. With GGUF or NF4 quantization, 8GB VRAM cards (RTX 3060) run smoothly with minimal quality loss.
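A rough way to see where these numbers come from is to estimate weight storage from the parameter count and the bits per parameter. The sketch below is an approximation under stated assumptions: it covers only the 6B diffusion transformer, and it ignores the text encoder, the VAE, activations, and the small overhead of quantization constants. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


bf16_gb = weight_memory_gb(6e9, 16)  # BF16 stores 16 bits per parameter
nf4_gb = weight_memory_gb(6e9, 4)    # NF4 stores about 4 bits per parameter

print(bf16_gb)  # 12.0
print(nf4_gb)   # 3.0
```

The BF16 weights alone take about 12 GB, which is why a 16GB card leaves working headroom, while 4-bit weights at about 3 GB fit comfortably on an 8GB card.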

Can I use it commercially?

Yes. Z-Image-Turbo uses the permissive Apache 2.0 license. You can use it freely for commercial products without fees.

How to write Chinese prompts?

Write them as you would chat naturally. Thanks to Qwen3-4B, you can use complex sentences, idioms, or poems. To render specific text in the image, wrap that text in quotes.
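When prompts are built in code, the quoting advice is easy to get wrong, because the quotes around the text to render can collide with the quotes of the Python string itself. The helper below is a minimal sketch; `prompt_with_text` and its `carrier` parameter are hypothetical names, and the use of ASCII double quotes is an assumption based on the Quick Start prompt.

```python
def prompt_with_text(scene: str, text_to_render: str, carrier: str = "sign saying") -> str:
    """Build a prompt whose final clause quotes the exact text to draw."""
    if '"' in text_to_render:
        # A nested double quote would end the quoted span early.
        raise ValueError("text_to_render must not contain double quotes")
    return f'{scene}, {carrier} "{text_to_render}"'


prompt = prompt_with_text(
    "Cyberpunk detective, rainy night, neon lights",
    "Tongyi Lab",
    carrier="Chinese sign saying",
)
print(prompt)
```

The resulting string can be passed directly as the `prompt` argument of the pipeline call.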

Support for ComfyUI / WebUI?

Yes. ComfyUI has Day-0 support (update to the latest version). Automatic1111 support is in the dev branch and coming soon.

Advantage over Flux.1?

Z-Image-Turbo focuses on efficiency and usability. While Flux.1 is great for extreme quality, Z-Image-Turbo offers 3x the speed, half the VRAM usage, and superior Chinese support.